As mobile developers, we often get asked: “Can we share code between the Android and iOS apps?” After all, much of the business logic remains the same regardless of the platform we build for. Mobile developers end up implementing much of the exact same logic on each platform. Not only the logic but also the tests around that code are duplicated. It is also challenging to ensure that both apps implement exactly the same logic: if different developers worked on each platform, chances are the logic differs, causing each app to behave differently.

In this post, I will go over Kotlin Multiplatform as a solution to this problem. I will explain how Kotlin Multiplatform can help us keep “common” code in one place, shared between the two native apps. One of the main objectives is that the common code should be native to each platform and have first-class support.

Problem Statement:

As an example for this use case, let’s assume that we are trying to implement an Analytics Event Logging framework. To keep things simple, let’s say the event names and properties should be the same on both platforms. An Event is a “common” thing for each platform. (Note: without shared code, each platform might name its events and properties differently, for example “button_click” vs. “ButtonClick”.)

In this example, we will build a Kotlin Multiplatform solution that contains the common code shared between the Android and iOS apps.

Setting up the Android Project:

Let’s begin by setting up a new Android project. Go through the new project wizard in Android Studio and create a new Android project called “KotlinMPLogging”. Once that is complete, you should be able to start the app and see the “Hello World!” screen.

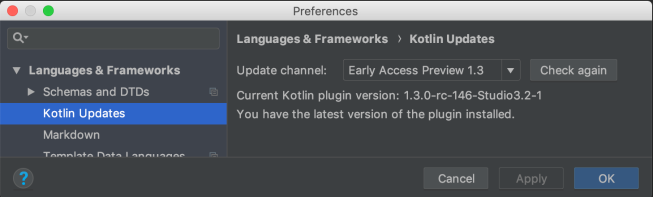

Switching to Kotlin 1.3:

At this point, let’s configure our project to use Kotlin 1.3. First, configure the IDE to use the Kotlin 1.3 plugin: open Settings (Cmd + ,), go to Languages & Frameworks > Kotlin Updates, and pick “Early Access Preview 1.3”.

Next, update the project-level build.gradle and set the Kotlin version (rc-80 is the latest RC version as of this writing):

```groovy
ext.kotlin_version = '1.3.0-rc-80'
```

Your IDE will report an error because it cannot obtain the pre-release version of Kotlin. To fix this, add the Kotlin EAP Maven repository to the build script:

```groovy
maven { url 'https://dl.bintray.com/kotlin/kotlin-eap' }
```

Add it to both the buildscript and allprojects blocks so that the app module can resolve the right version of the standard library.

After these changes are made, your build.gradle should look like this:

```groovy
buildscript {
    ext.kotlin_version = '1.3.0-rc-80'
    repositories {
        google()
        jcenter()
        maven { url 'https://dl.bintray.com/kotlin/kotlin-eap' }
    }
    dependencies {
        classpath 'com.android.tools.build:gradle:3.2.1'
        classpath "org.jetbrains.kotlin:kotlin-gradle-plugin:$kotlin_version"
    }
}

allprojects {
    repositories {
        google()
        jcenter()
        maven { url 'https://dl.bintray.com/kotlin/kotlin-eap' }
    }
}
```

We should also update the Gradle wrapper to 4.10 or newer, because the Kotlin/Native plugin requires it. To do so, update distributionUrl in the gradle-wrapper.properties file:

```properties
distributionUrl=https\://services.gradle.org/distributions/gradle-4.10.2-all.zip
```

At this point, you should be able to build and run the Android project successfully. Nothing has changed so far except for the switch to Kotlin 1.3.

Setting up the “Common” Kotlin Multiplatform module:

Now for the interesting part: building the Kotlin Multiplatform portion, which will be shared between the platforms (iOS and Android). To keep things simple, create a folder named common in the current project folder.

The folder will be structured in the following way:

common/src/commonMain: all the common code lives here.

common/src/androidMain: Android-specific code would live here; for this example, it stays empty.

common/src/iosMain: iOS-specific code would live here; for this example, it stays empty.

Next, create a build.gradle file for this module, inside the “common” folder created above.

```groovy
apply plugin: 'kotlin-multiplatform'

kotlin {
    targets {
        // Xcode sets SDK_NAME: build for a device (arm64) when it starts with "iphoneos",
        // otherwise for the simulator (x64)
        final def iOSTarget = System.getenv('SDK_NAME')?.startsWith("iphoneos") \
                              ? presets.iosArm64 : presets.iosX64

        // The iOS target is compiled into an Objective-C/Swift framework
        fromPreset(iOSTarget, 'iOS') {
            compilations.main.outputKinds('FRAMEWORK')
        }

        // The Android app consumes the JVM output
        fromPreset(presets.jvm, 'android')
    }

    sourceSets {
        commonMain.dependencies {
            api 'org.jetbrains.kotlin:kotlin-stdlib-common'
        }
        androidMain.dependencies {
            api 'org.jetbrains.kotlin:kotlin-stdlib'
        }
    }
}

// workaround for https://youtrack.jetbrains.com/issue/KT-27170
configurations {
    compileClasspath
}
```

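Here we apply the ‘kotlin-multiplatform’ plugin and declare two targets: an iOS framework and a JVM target named android for the Android app. The iOS target is built for iosArm64 (a physical device) when the SDK_NAME environment variable set by Xcode starts with “iphoneos”, and for iosX64 (the simulator) otherwise. Source set dependencies are defined here as well. For example, the common source set depends on kotlin-stdlib-common.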
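To make the target choice easier to reason about outside Gradle, the ternary in the targets block can be restated as a plain Kotlin function. This is only an illustration. iosPresetFor is a hypothetical name, it returns the preset name as a string, and it is not part of the Gradle or Kotlin plugin API.

```kotlin
// Hypothetical helper that restates the iOS target choice from common/build.gradle.
// Xcode sets SDK_NAME to values such as "iphoneos12.0" (device) or
// "iphonesimulator12.0" (simulator); outside Xcode it is usually unset (null).
fun iosPresetFor(sdkName: String?): String =
    if (sdkName?.startsWith("iphoneos") == true) {
        "iosArm64" // building for a physical device
    } else {
        "iosX64"   // building for the simulator, or no SDK_NAME at all
    }
```

For example, iosPresetFor(System.getenv("SDK_NAME")) returns “iosX64” when Gradle is run from a terminal without Xcode. In that case, the framework is built for the simulator.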

In commonMain, start by creating the Event interface (commonMain/kotlin/com/manijshrestha/kotlinmplogging/analytics):

```kotlin
package com.manijshrestha.kotlinmplogging.analytics

interface Event {
    fun eventName(): String
    fun eventProperties(): Map<String, String>?
}
```

To limit the complexity of this example, let’s create an AnalyticsManager interface. The idea is that an Event gets reported by an AnalyticsManager. Each platform will implement its own manager and use the Events from the common code.

```kotlin
package com.manijshrestha.kotlinmplogging.analytics

interface AnalyticsManager {
    fun report(event: Event)
}
```
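Because AnalyticsManager is a plain interface, the shared logic can also be tested once instead of per platform, which addresses the duplicated tests mentioned at the start. Here is a minimal sketch, assuming a hypothetical RecordingAnalyticsManager that could live in a commonTest source set (not set up in this post). It simply remembers every event it is given. The two interfaces are repeated so the snippet compiles on its own.

```kotlin
// Copies of the commonMain interfaces so this sketch is self-contained.
interface Event {
    fun eventName(): String
    fun eventProperties(): Map<String, String>?
}

interface AnalyticsManager {
    fun report(event: Event)
}

// Hypothetical test double: records events in memory instead of sending them anywhere.
class RecordingAnalyticsManager : AnalyticsManager {
    private val recorded = mutableListOf<Event>()

    // Read-only snapshot of the reported events, in the order they arrived.
    val events: List<Event> get() = recorded.toList()

    override fun report(event: Event) {
        recorded += event
    }
}
```

A shared test could pass this manager to common code and then assert on events, and the same test would run on both platforms.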

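With this plumbing in place, we can now add real Event implementations. We will add two events, ButtonClickEvent and ViewEvent. ViewEvent is shown below; ButtonClickEvent follows the same pattern and takes a buttonName argument.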

```kotlin
package com.manijshrestha.kotlinmplogging.analytics

data class ViewEvent(private val pageName: String) : Event {
    override fun eventName() = "Page_Viewed"
    override fun eventProperties() = mapOf(
        "page_name" to pageName
    )
}
```
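The code for ButtonClickEvent is not shown here, but its usage elsewhere in the post gives its shape. It is constructed as ButtonClickEvent("Red") on Android and ButtonClickEvent.init(buttonName:) on iOS, and it appears as Button_Clicked in the log output. Based on that, a plausible sketch follows. The “button_name” property key is an assumption, and the Event interface is repeated so the snippet compiles on its own.

```kotlin
// Copy of the commonMain Event interface so this sketch is self-contained.
interface Event {
    fun eventName(): String
    fun eventProperties(): Map<String, String>?
}

// Sketch of ButtonClickEvent. The event name matches the "Button_Clicked" output
// described in this post; the "button_name" key is an assumed property name.
data class ButtonClickEvent(private val buttonName: String) : Event {
    override fun eventName() = "Button_Clicked"
    override fun eventProperties() = mapOf(
        "button_name" to buttonName
    )
}
```

Because the event name and property keys are fixed in common code, ButtonClickEvent("Red") yields the same name and properties whether it is created from Kotlin or Swift.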

As you can see, each event class defines its own event name, so these events have the same name regardless of the platform (iOS or Android). The required parameters are also defined in the class, so each platform has to provide those arguments.

We could do a lot more here, but to keep this post focused on the common code, we will leave it at that and move on to the platform-specific implementations.

Using Common in Android Project:

Now it’s time to use the common code in our Android project. To do so, include the “common” module in the settings.gradle file:

```groovy
include ':common'
```

Next, add the “common” project as a dependency in the dependencies section of the app’s build.gradle:

```groovy
implementation project(':common')
```

With the above changes, we can now provide an implementation of AnalyticsManager. It could send events to Fabric Answers, Google Analytics, or any other service you want to call. For now, we will log each event to Logcat using Android’s Log class.

```kotlin
package com.manijshrestha.kotlinmplogging

import android.util.Log
import com.manijshrestha.kotlinmplogging.analytics.AnalyticsManager
import com.manijshrestha.kotlinmplogging.analytics.Event

class AndroidAnalyticsManager : AnalyticsManager {
    override fun report(event: Event) {
        Log.d("AAM", "Interaction ${event.eventName()} happened with property ${event.eventProperties()}")
    }
}
```
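Since each platform supplies its own AnalyticsManager, reporting to several services at once does not require any change to the events. One option, sketched here as an assumption rather than part of the sample project, is a hypothetical CompositeAnalyticsManager that forwards each event to a list of managers. Because it only depends on the shared interfaces, it could live in commonMain. The interfaces are repeated so the snippet compiles on its own.

```kotlin
// Copies of the commonMain interfaces so this sketch is self-contained.
interface Event {
    fun eventName(): String
    fun eventProperties(): Map<String, String>?
}

interface AnalyticsManager {
    fun report(event: Event)
}

// Hypothetical fan-out manager: forwards every event to each wrapped manager, in order.
class CompositeAnalyticsManager(
    private val managers: List<AnalyticsManager>
) : AnalyticsManager {
    override fun report(event: Event) {
        managers.forEach { it.report(event) }
    }
}
```

On Android, it could wrap AndroidAnalyticsManager() together with a manager for a real analytics service. The rest of the app would keep calling report() on a single object.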

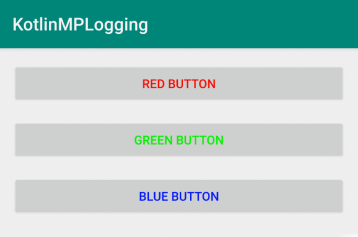

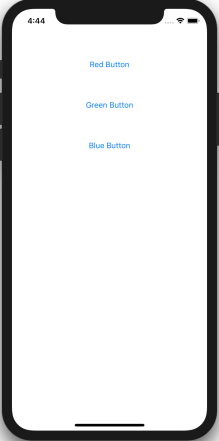

To see it in action, we will update the activity layout to have three buttons and send a separate event for each button click.

```xml
<?xml version="1.0" encoding="utf-8"?>
<android.support.constraint.ConstraintLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <Button android:id="@+id/red_button"
        android:layout_width="0dp"
        android:layout_height="wrap_content"
        android:text="@string/red_button"
        android:layout_margin="16dp"
        android:textColor="#FF0000"
        android:onClick="onButtonClick"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintRight_toRightOf="parent"
        app:layout_constraintTop_toTopOf="parent"/>

    <Button android:id="@+id/green_button"
        android:layout_width="0dp"
        android:layout_height="wrap_content"
        android:text="@string/green_button"
        android:layout_margin="16dp"
        android:textColor="#00FF00"
        android:onClick="onButtonClick"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintRight_toRightOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/red_button"/>

    <Button android:id="@+id/blue_button"
        android:layout_width="0dp"
        android:layout_height="wrap_content"
        android:text="@string/blue_button"
        android:layout_margin="16dp"
        android:textColor="#0000FF"
        android:onClick="onButtonClick"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintRight_toRightOf="parent"
        app:layout_constraintTop_toBottomOf="@+id/green_button"/>

</android.support.constraint.ConstraintLayout>
```

Here is the Activity that reports the events:

```kotlin
package com.manijshrestha.kotlinmplogging

import android.os.Bundle
import android.support.v7.app.AppCompatActivity
import android.view.View
import com.manijshrestha.kotlinmplogging.analytics.ButtonClickEvent
import com.manijshrestha.kotlinmplogging.analytics.ViewEvent

class MainActivity : AppCompatActivity() {

    private val analyticsManager = AndroidAnalyticsManager()

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)
    }

    override fun onResume() {
        super.onResume()
        analyticsManager.report(ViewEvent("Main Page"))
    }

    fun onButtonClick(view: View) {
        when (view.id) {
            R.id.red_button -> analyticsManager.report(ButtonClickEvent("Red"))
            R.id.green_button -> analyticsManager.report(ButtonClickEvent("Green"))
            R.id.blue_button -> analyticsManager.report(ButtonClickEvent("Blue"))
        }
    }
}
```

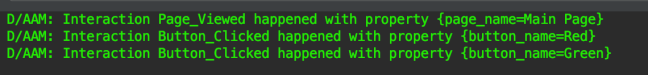

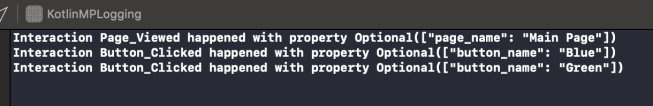

With these changes, we can run the app and see it in action.

When the page loads, we report a Page_Viewed event with the page name, and as we tap each button, a Button_Clicked event is reported (in the screenshots above, we can see the Red and Green button click events).

With the Android side fully implemented, it is time to implement the iOS app.

Building common framework for iOS:

If you take a peek at the “common/build” folder, you can see that the build generated Java class files for our Android app to consume. For the iOS app, we need to compile the module as a framework. To do that, add the following task to the common module’s build.gradle file:

```groovy
task packForXCode(type: Sync) {
    // The framework is copied to common/build/xcode-frameworks
    final File frameworkDir = new File(buildDir, "xcode-frameworks")
    // Xcode sets CONFIGURATION (e.g. Debug or Release); default to DEBUG outside Xcode
    final String mode = System.getenv('CONFIGURATION')?.toUpperCase() ?: 'DEBUG'

    inputs.property "mode", mode
    // Link the iOS framework for the selected mode before copying it
    dependsOn kotlin.targets.iOS.compilations.main.linkTaskName("FRAMEWORK", mode)

    from { kotlin.targets.iOS.compilations.main.getBinary("FRAMEWORK", mode).parentFile }
    into frameworkDir

    // Write a small gradlew script next to the framework that runs the project's
    // Gradle wrapper with the same JAVA_HOME, so it can be invoked from Xcode
    doLast {
        new File(frameworkDir, 'gradlew').with {
            text = "#!/bin/bash\nexport 'JAVA_HOME=${System.getProperty("java.home")}'\ncd '${rootProject.rootDir}'\n./gradlew \$@\n"
            setExecutable(true)
        }
    }
}

tasks.build.dependsOn packForXCode
```

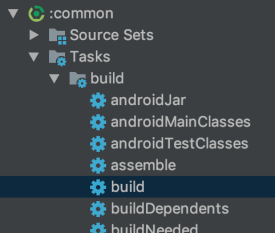

With these changes in place, run the ‘build’ task.

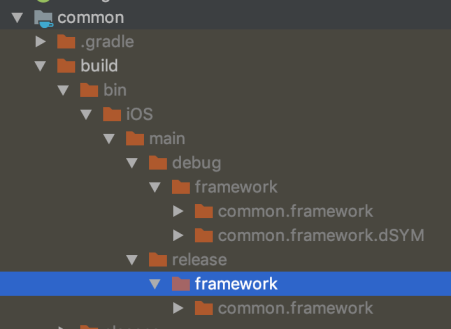

We should see the frameworks being generated for both release and debug.

Setting up iOS project:

Go through the new project setup wizard in Xcode and create a single view application.

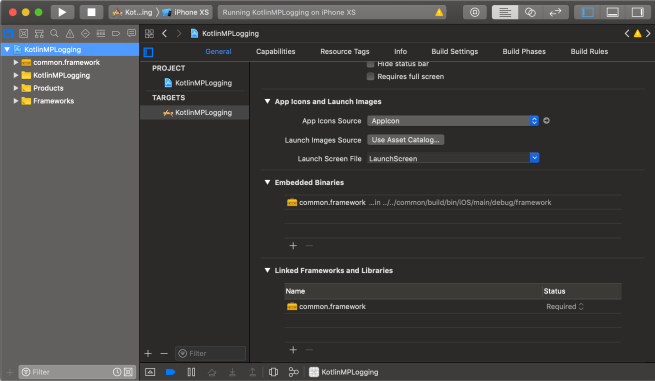

Now add the framework built in the step above to the project. To do this, go to General > Embedded Binaries and click +.

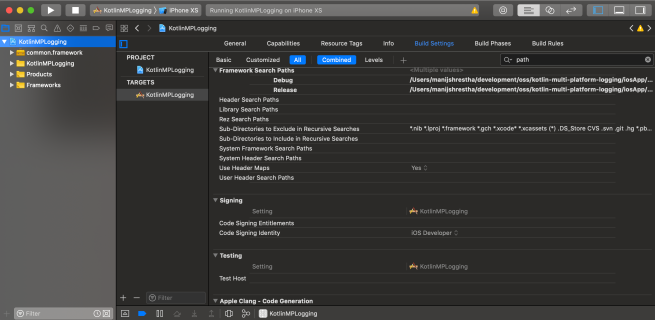

Also add the framework’s location to Framework Search Paths under “Build Settings”.

With these changes, you should be able to compile and run the app without any issues.

At this stage, we are going to create IosAnalyticsManager. Create an IosAnalyticsManager.swift file in the project with the implementation of your choice. For this demo, we print the event details to the console.

```swift
import Foundation
import common

class IosAnalyticsManager : AnalyticsManager {
    func report(event: Event) {
        print("Interaction \(event.eventName()) happened with property \(event.eventProperties().debugDescription)")
    }
}
```
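Both platform managers build the same “Interaction … happened with property …” line by hand. The properties will likely not print identically, because Swift’s debugDescription of an optional dictionary and Kotlin’s Map.toString() use different formats. If the wording should match exactly, one option is to move the formatting into commonMain as well. The sketch below uses describe(), a hypothetical extension function that is not part of the sample project. How a top-level Kotlin function surfaces in Swift depends on the Kotlin/Native export rules, so check the generated framework header before calling it from Swift. The Event interface is repeated so the snippet compiles on its own.

```kotlin
// Copy of the commonMain Event interface so this sketch is self-contained.
interface Event {
    fun eventName(): String
    fun eventProperties(): Map<String, String>?
}

// Hypothetical shared formatter: the same text the Android manager logs.
// Kotlin renders the map as {key=value}, or "null" when there are no properties.
fun Event.describe(): String =
    "Interaction ${eventName()} happened with property ${eventProperties()}"
```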

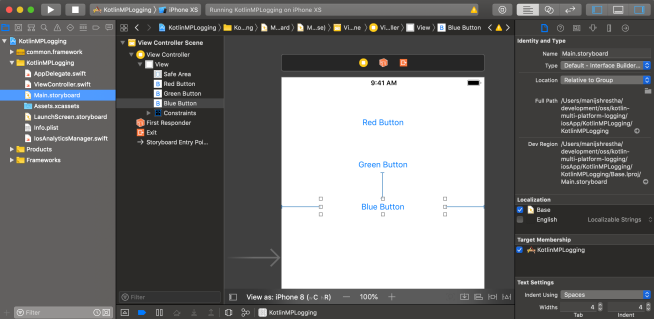

As in the Android app, add three buttons to the storyboard and link their click actions to the view controller:

```swift
import UIKit
import common

class ViewController: UIViewController {

    let analyticsManager = IosAnalyticsManager()

    override func viewDidLoad() {
        super.viewDidLoad()
        // Report that the page was viewed
        analyticsManager.report(event: ViewEvent(pageName: "Main Page"))
    }

    @IBAction func redButtonClicked(_ sender: Any) {
        analyticsManager.report(event: ButtonClickEvent(buttonName: "Red"))
    }

    @IBAction func greenButtonClicked(_ sender: Any) {
        analyticsManager.report(event: ButtonClickEvent(buttonName: "Green"))
    }

    @IBAction func blueButtonClicked(_ sender: Any) {
        analyticsManager.report(event: ButtonClickEvent(buttonName: "Blue"))
    }
}
```

Now run the app to see it in action.

When the page loads, the Page_Viewed event is reported; similarly, as we tap each button, a Button_Clicked event is reported. In our case, we can see the output in the console log.

Summary:

To wrap it all up, we built a “common” component using the Kotlin Multiplatform plugin and defined Event and AnalyticsManager there. We built an Android app that implemented the interface defined in common and used it to report events. Similarly, we built an iOS app that implemented the protocol exported from common, and we used the exact same Event classes defined in common to report events.

This just scratches the surface of what’s possible with Kotlin Multiplatform, but I hope it shows the potential. I hope you found this post helpful. Until next time, adios amigos.

Sample code for this project is available on GitHub: https://github.com/manijshrestha/kotlin-multi-platform-logging
